Case Study

Re-Platform with Generative AI

Re-Platform: Cloud Migration with Generative AI

In the ever-evolving landscape of software development, the transition from legacy monolithic applications to modern microservices architectures has become a crucial step for businesses seeking agility, scalability, and efficiency. Our recent project was a testament to this transformation: we re-platformed a legacy monolithic application as a set of Node.js microservices deployed on AWS, and we used Generative AI models to reduce the project's time and cost. This post reflects on that journey and on how Generative AI not only sped up the work but also improved code quality, resulting in significant cost and time savings.

Opportunity

Our client, a prominent player in Financial Services, was burdened by a monolithic application that was difficult to update, scale, and maintain. The challenge was to break this large, interconnected codebase into smaller, independently deployable microservices, a task daunting in both complexity and scope.

Generative AI:

Generative AI code models are revolutionizing software development. These models can interpret the syntax, semantics, and structural nuances of many programming languages. When asked to convert code from one language to another, a model analyzes the source code and rewrites it in the target language while aiming to preserve its logic and functionality. This goes beyond simple line-by-line translation: the model can recast the code in the target language's idiomatic patterns and best practices. With each new generation, these models are becoming more reliable for complex translations across both established and emerging programming languages. The result is a productivity boost and a bridge between diverse technology stacks, fostering a more integrated and versatile software development landscape.

Limitations:

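LLMs are good at converting individual files or writing code from concise prompts. However, we cannot feed an entire repository to a model and expect it to work its magic: a large codebase does not fit in a model's context window, and a model translating one file cannot see the files it depends on unless that context is supplied. To address this, we built a migration engine, ‘migratai’, based on the process outlined by Microsoft Research.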
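To make the context-window point concrete, here is a rough budget check. It is a minimal sketch, not part of migratai: it assumes about 4 characters per token (a common rule of thumb that varies by tokenizer and is often less favorable for code), and the context limit and output reserve are hypothetical parameters.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count using an assumed characters-per-token ratio."""
    return math.ceil(len(text) / chars_per_token)


def prompt_budget(context_limit: int, reserve_for_output: int) -> int:
    """Tokens left for the input once room is reserved for the generated code."""
    return context_limit - reserve_for_output


def fits_in_context(sources: dict[str, str], context_limit: int, reserve_for_output: int) -> bool:
    """True if all the given files together fit in a single prompt."""
    total = sum(estimate_tokens(text) for text in sources.values())
    return total <= prompt_budget(context_limit, reserve_for_output)


def oversized_files(sources: dict[str, str], context_limit: int, reserve_for_output: int) -> list[str]:
    """Files too large to translate even on their own (candidates for manual splitting)."""
    budget = prompt_budget(context_limit, reserve_for_output)
    return [path for path, text in sources.items() if estimate_tokens(text) > budget]
```

For example, with two 4,000-character files and a hypothetical 1,500-token limit that reserves 500 tokens for output, each file fits on its own (about 1,000 tokens) but the pair does not. Scaled up to a real repository, this is why per-file prompts carrying only the relevant dependency context are workable where whole-repository prompts are not.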

Our Approach:

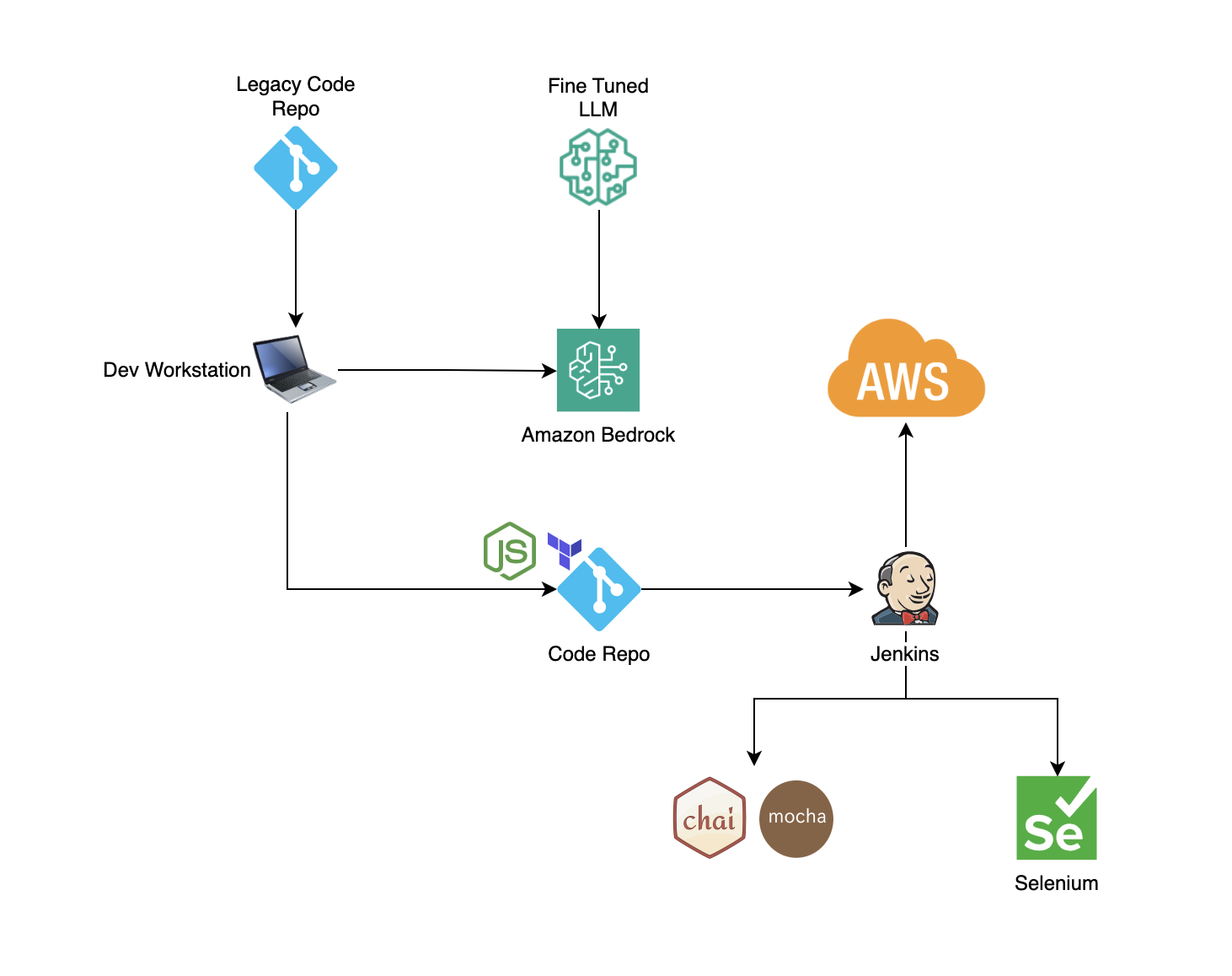

- Analyze the Existing Codebase: Our ‘ReCodeAI’ process starts by cataloging the existing codebase. A custom fine-tuned LLM running on Amazon Bedrock processes every file in the codebase to build a matrix of internal and external dependencies. We also extract the signatures of all functions in the existing codebase.

- AI-Powered Code Generation: For each source file in the existing repository, migratai builds a custom prompt containing the file's internal and external dependencies and the relevant function signatures. The prompt is sent to our LLM on Bedrock, which generates code in the target language. Because the model sees each file in the context of its dependencies, the new code is not just a carbon copy of the old logic in a new language but a more efficient, cloud-native microservice.

- Expert Integration and Debugging: While the LLM played a pivotal role, human expertise was crucial. Our team of seasoned engineers integrated and debugged the AI-generated code. This blend of AI efficiency and human expertise ensured that the new microservices were robust, scalable, and maintainable.

- Test Script Generation: Using our LLM, migratai converts the existing test scripts into robust unit and regression test suites for the new code. Where no test scripts exist, the LLM generates a new set. Once again, the LLM can only lay the foundation; experts need to build on top of it to finish the job.

- Documentation: We used the LLM to generate inline comments, READMEs, and API documentation.
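The output of the analysis step can be pictured as a small data structure. The sketch below is illustrative only: in the case study a fine-tuned LLM extracts imports and signatures, whereas here that extraction is assumed to have already happened, and the `SourceFile` record and function names are hypothetical. Each import is classified as internal if it names another file in the repository and external otherwise, and internal edges are also laid out as a square 0/1 matrix.

```python
from dataclasses import dataclass, field


@dataclass
class SourceFile:
    """Hypothetical record produced by the analysis step for one file."""
    path: str
    imports: list[str] = field(default_factory=list)     # modules or files this file references
    signatures: list[str] = field(default_factory=list)  # functions this file defines


def split_dependencies(files: list[SourceFile]) -> dict[str, dict[str, list[str]]]:
    """Classify each import as internal (another file in the repo) or external."""
    repo_paths = {f.path for f in files}
    result = {}
    for f in files:
        result[f.path] = {
            "internal": sorted(d for d in f.imports if d in repo_paths),
            "external": sorted(d for d in f.imports if d not in repo_paths),
        }
    return result


def internal_dependency_matrix(files: list[SourceFile]) -> tuple[list[str], list[list[int]]]:
    """Square 0/1 matrix: row i has a 1 in column j if file i depends on file j."""
    order = [f.path for f in files]
    index = {path: i for i, path in enumerate(order)}
    matrix = [[0] * len(order) for _ in order]
    for f in files:
        for dep in f.imports:
            if dep in index:
                matrix[index[f.path]][index[dep]] = 1
    return order, matrix


def signature_catalog(files: list[SourceFile]) -> dict[str, list[str]]:
    """Map each file to the function signatures it exposes."""
    return {f.path: list(f.signatures) for f in files}
```

For a hypothetical repository where `billing/invoice` imports `billing/tax` and a third-party `decimal-lib`, `split_dependencies` reports `billing/tax` as internal and `decimal-lib` as external, and the matrix row for `billing/invoice` has a 1 in the `billing/tax` column.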
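A per-file prompt for the code generation step might be assembled along the following lines. This is a hypothetical template, not migratai's actual prompt: the wording, the `build_translation_prompt` name, and the choice to pass each internal dependency together with the signatures it exposes are assumptions made for illustration.

```python
def build_translation_prompt(
    path: str,
    source: str,
    internal_deps: dict[str, list[str]],
    external_deps: list[str],
    target: str = "Node.js",
) -> str:
    """Assemble a per-file translation prompt (hypothetical template).

    internal_deps maps each internal dependency's path to the function signatures
    it exposes, so the model knows what this file may call without seeing its source.
    """
    lines = [
        f"Translate the file {path} into idiomatic {target}.",
        "Preserve its behavior and follow the target platform's best practices.",
        "",
        "Internal dependencies (call them only through these signatures):",
    ]
    for dep_path in sorted(internal_deps):
        lines.append(f"- {dep_path}")
        lines.extend(f"    {sig}" for sig in internal_deps[dep_path])
    if not internal_deps:
        lines.append("- none")
    lines += ["", "External dependencies (map to target-ecosystem equivalents):"]
    lines += [f"- {dep}" for dep in sorted(external_deps)] or ["- none"]
    # Wrap the source in tags to separate it clearly from the instructions.
    lines += ["", "<source>", source, "</source>"]
    return "\n".join(lines)
```

Listing only the callable signatures of internal dependencies, rather than their full source, is meant to keep each prompt small while still telling the model how neighboring modules will be called.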
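Sending a prompt to a model on Amazon Bedrock can be done through the Converse API of the boto3 `bedrock-runtime` client. The sketch below assumes that API; the system instruction, token limit, and `translate_file` name are illustrative choices, and the model ID is a placeholder because the case study does not say how its fine-tuned model is addressed. The client is passed in so a stub can stand in for it during testing.

```python
from typing import Any

# Illustrative system instruction; not taken from the case study.
SYSTEM_PROMPT = (
    "You are migrating legacy code to Node.js microservices. "
    "Return only the translated code."
)


def translate_file(client: Any, model_id: str, prompt: str, max_tokens: int = 4096) -> str:
    """Send one per-file prompt via the Bedrock Converse API and return the reply text.

    `client` is expected to be a boto3 "bedrock-runtime" client; any object with a
    compatible `converse` method (such as a test stub) also works.
    """
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.0},
    )
    # A reply cut off at the token limit is incomplete code; surface it instead of saving it.
    if response.get("stopReason") == "max_tokens":
        raise RuntimeError("Model output was truncated; raise max_tokens or split the file.")
    # The reply is a list of content blocks; join the text blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)
```

In practice the client would come from `boto3.client("bedrock-runtime", region_name=...)`. A temperature of 0 makes reruns more repeatable, though not guaranteed identical, which helps when comparing regenerated files during debugging.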
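Because generated test suites are only a foundation, reviewers need a quick way to see where they are thin. One crude, hypothetical aid (not something the case study describes) is to compare the function names from the signatures prepared during analysis against the test files and list the functions that no test ever calls. It is a textual heuristic, not a coverage measurement.

```python
import re


def untested_functions(function_names: list[str], test_sources: dict[str, str]) -> list[str]:
    """Return function names never called (name followed by "(") in any test file.

    A textual check only: it points reviewers at gaps in generated suites and
    does not measure real code coverage.
    """
    all_tests = "\n".join(test_sources.values())
    missing = []
    for name in function_names:
        # Word boundary before the name so "computeTax" does not match "recomputeTax(".
        if not re.search(rf"\b{re.escape(name)}\s*\(", all_tests):
            missing.append(name)
    return missing
```

A proper coverage tool on the Node.js side would give a more reliable picture; a check like this is only meant to flag obvious gaps before experts extend the suite.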

Results:

- Time and Cost Efficiency: Leveraging Generative AI, we cut implementation time in half. This time saving translated directly into cost savings for our client.

- Improved Code Quality: The AI-generated code adhered to modern Node.js best practices, resulting in higher-quality code with improved performance and easier maintainability.

- Scalability and Flexibility: The new microservices architecture, powered by Node.js, provided the scalability and flexibility that the client needed. It allowed for easier updates, quicker deployments, and better fault isolation.

The journey from a monolithic legacy system to a Node.js microservices architecture, assisted by Generative AI, was a milestone project for us. It proved that with the right tools and expertise, even the most daunting legacy systems could be transformed into modern, efficient, and scalable solutions. As we continue to explore the possibilities of AI in cloud migration and software development, we are excited about the prospects and the value it brings to our clients.